Consulting, Dynamics AX Setup and Configuration

Walkthrough Deep Dive into Understanding Dynamics Operations (V7) Architecture Part 1

Disclaimer: The tips and tricks I introduce in this series are mine alone and are not officially supported by Microsoft. That being said, I’ve had the time to notice some very interesting repeating patterns with Dynamics Operations deployments. To get the most out of our Dynamics Operations deployments, we need a strong understanding of the advantages and challenges of the current cloud (rapidly evolving hybrid) approach to our ERP systems. Let’s start with a high-level post explaining the fundamentals of our new environments.

SUMMARIZING WHAT HAS HAPPENED IN A FEW SHORT SENTENCES:

We can no longer assume that every application will be built to run on Windows. Applications need to be able to run on mobile devices, servers, desktops, netbooks, tablets, laptops, scanners, etc. This presents quite a challenge: either make the applications platform independent or risk pulling a ‘Blackberry’ and becoming MBA fodder for years to come as another sad example of a company that failed to recognize changing times.

Okay great, platform-independent applications that can run everywhere → hallelujah!!!!!

You can see what gets everyone so excited. Platform-independent applications mean that we can now run more applications than ever. Our cell phone can become a powerful business vehicle (like all the people using their smartphones to take credit cards), or we can use that super niche BI or warehouse application to give our company a serious competitive advantage.

But Wait, what is the secret sauce to getting all applications to talk to one another???

Something that is used more commonly than anything else: HTTP and the security protocols built on top of it. HTTP-based security tends to be the most commonly accepted standard because the largest network in the world (AKA the internet) is built on HTTP. So, with our cloud applications in the Windows world, we look heavily towards IIS-hosted applications that communicate via HTTP security protocols, though Windows is not a prerequisite for those protocols.

And now comes the Security GOTCHA:

There are several ways to handle security over HTTP. What we really have are what we call endpoints. Endpoints determine the type of security, the standards used for that security, the performance, etc. It’s critical to understand our endpoints to see the best ways to get the most out of our new cloud/on-site architecture.

Let’s start by seeing what I’m talking about.

Here I have a “Brandon Tailored” version of the Dynamics Operations Machine.

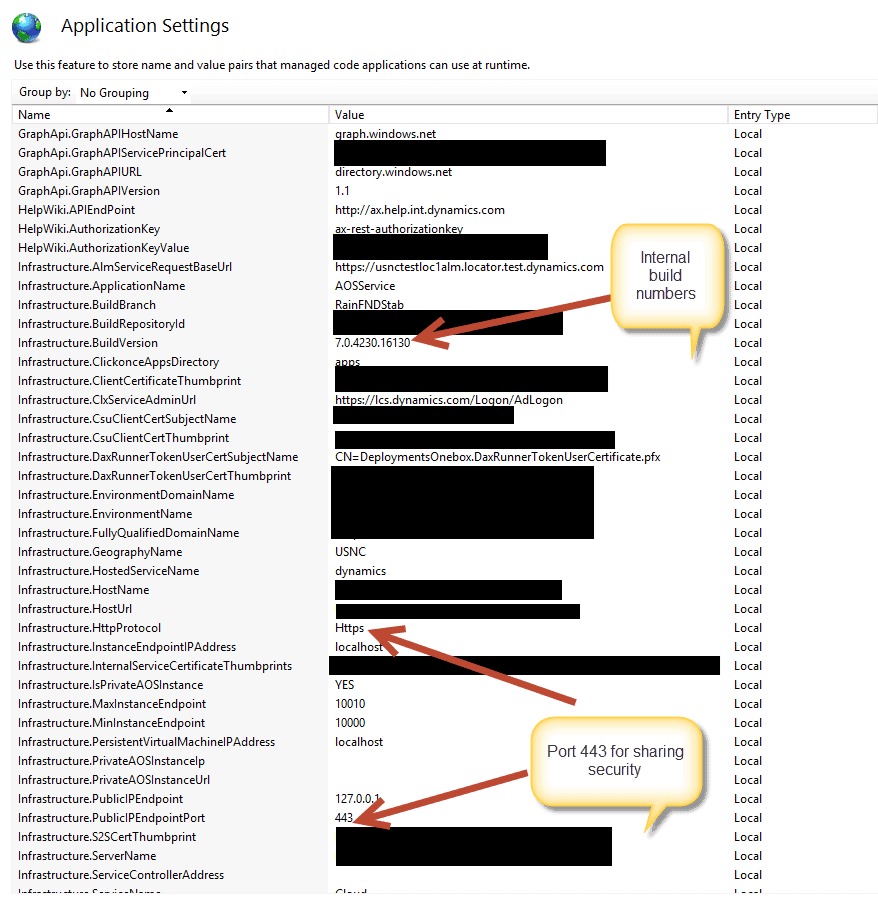

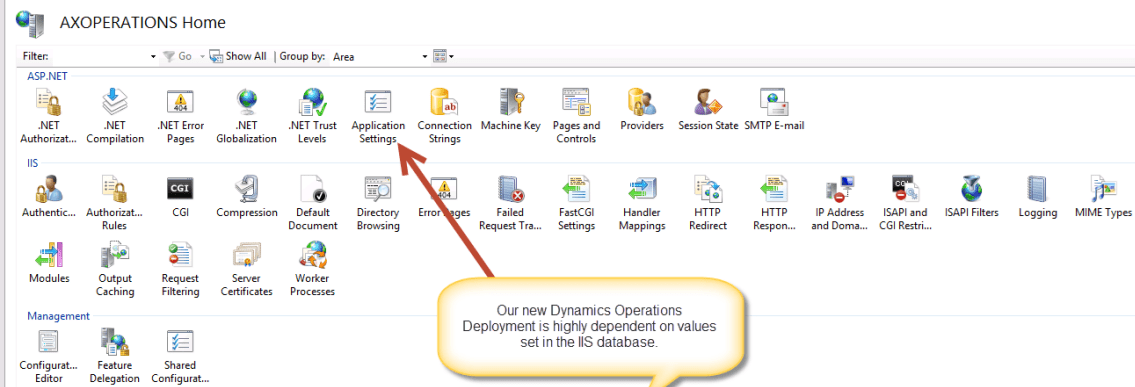

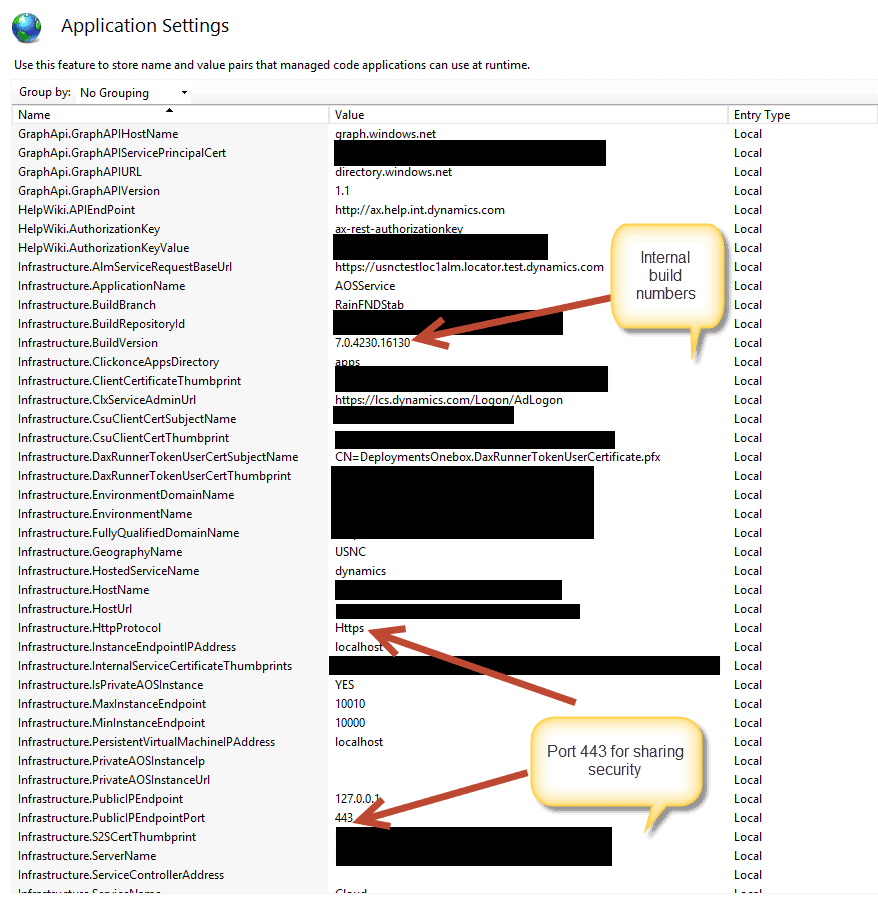

Now, I’m going to bring up IIS Manager from a Dynamics Operations Machine. Then, let’s click on Application Settings.

Notice that there are a ton of important settings.

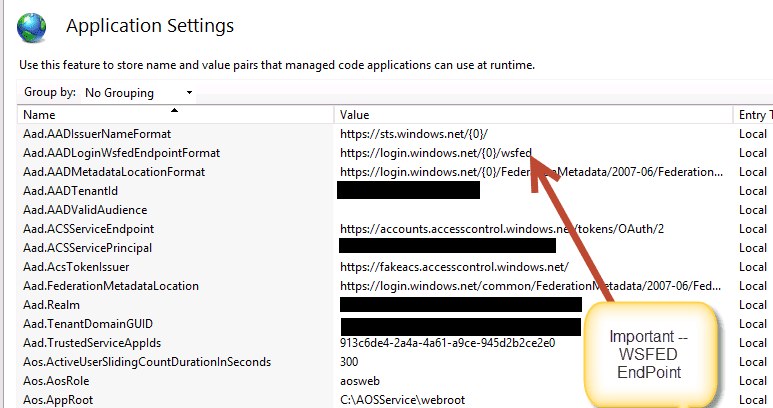

And a few more settings. I can’t show them all because there are too many. But what you should notice is that Dynamics Operations is basically set up as an IIS-hosted web site (aka web application). It uses modern HTTP-based authentication by making a call out to Azure Active Directory and using Lifecycle Services as an authorization application that makes sure users are licensed for AX access. Yes, someone could easily game this mechanism by changing the URLs in the application settings, but Microsoft maintains a zero-access production environment policy which prevents this. In other words, the IIS settings are highly protected by policy. The zero-access production environment policy is a double-edged sword: it protects the licensing model, but it makes a number of technical practices less productive than their on-premise counterparts.
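To make the idea of "application settings" concrete: IIS application settings normally live in the `appSettings` section of a site's web.config as key/value pairs. Here is a minimal Python sketch of reading them. The key names and URLs below are invented for illustration and are not the real Dynamics Operations settings.

```python
import xml.etree.ElementTree as ET

# Hypothetical web.config fragment. The key names and URLs are made up;
# they only illustrate the shape of identity/licensing style settings.
SAMPLE_WEB_CONFIG = """<configuration>
  <appSettings>
    <add key="Example.IdentityProviderUrl" value="https://login.example.invalid/" />
    <add key="Example.LicensingServiceUrl" value="https://licensing.example.invalid/" />
    <add key="Example.HostUrl" value="https://erp.example.invalid/" />
  </appSettings>
</configuration>
"""


def read_app_settings(xml_text):
    """Return the appSettings key/value pairs of a web.config document as a dict."""
    root = ET.fromstring(xml_text)
    settings = {}
    for entry in root.findall("./appSettings/add"):
        settings[entry.get("key")] = entry.get("value")
    return settings


def url_settings(settings):
    """Keep only the settings whose value looks like an HTTP(S) URL."""
    return {
        key: value
        for key, value in settings.items()
        if value.startswith(("http://", "https://"))
    }


if __name__ == "__main__":
    for key, value in url_settings(read_app_settings(SAMPLE_WEB_CONFIG)).items():
        print(key, "->", value)
```

Listing the URL-valued settings this way is a quick way to see which external services a hosted application calls out to.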

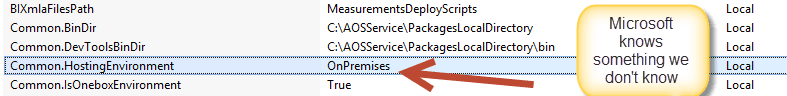

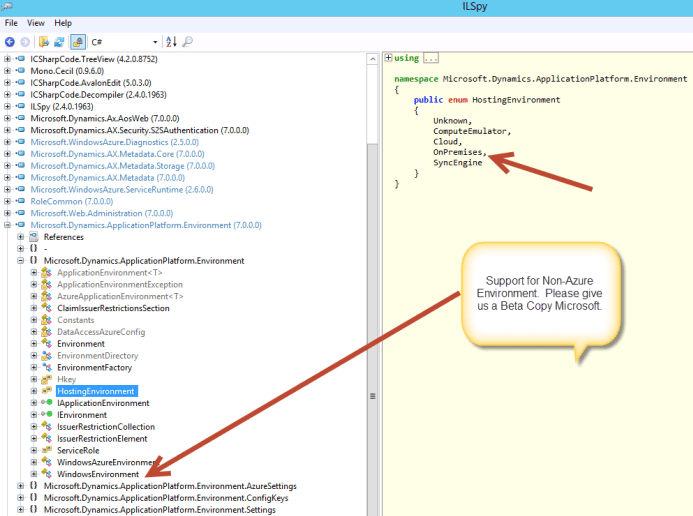

As I said, there are lots of interesting settings that you can have fun with here. For example, the on-premise support for the application has actually progressed a lot more than Microsoft has announced. There are other little on-premise hooks in this build also, but that is a subject for Microsoft to address. There is just some very interesting code.

Lol.. and something else..

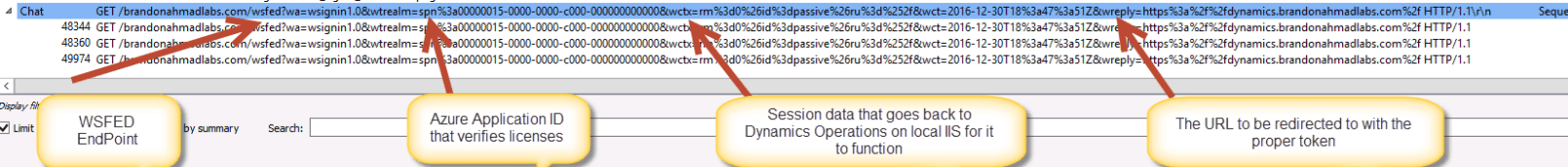

Now, going back to the issue. Let’s take a look at Wireshark to demonstrate what happens whenever we authenticate with an application. I happen to love Wireshark. With a couple of small filters and a tweak for capturing the key, you can decrypt the encrypted traffic and get the information you need to troubleshoot. Fiddler does something like this also; Wireshark is more powerful, but Fiddler is easier to use. So, looking at our Wireshark trace, let’s break down what happens.

- The user enters a URL (the request) that corresponds to a location where Microsoft hosts an AOS web application in IIS

- The Hosted AOS Application redirects to a Trusted Identity Provider URL, which goes to Azure Active Directory

- The identity provider application (a web application hosted in Azure) sends a page back asking the user to authenticate (or the information can be passed another way, say a cookie or something in the login URL)

- The user authenticates in some way (for example, by entering a login)

- Azure Active Directory passes the authorization code to Lifecycle Services, which authorizes the user for Dynamics AX access

- Once authorized and authenticated, a token is issued back to the user with the underlying information including the authorization data for the Dynamics Applications

- The User then goes back to the Application but with the token in hand and presents it

- The hosted application validates the token and allows the user to access its resources
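The eight steps above can be sketched as a toy simulation in Python. This is only an illustration of the redirect-then-token pattern: the user directory, the licensed-user list, the realm value and the status strings are all hypothetical stand-ins, and real tokens are cryptographically signed, which this sketch leaves out.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical in-memory stand-ins for the directory and the licensing check.
DIRECTORY = {"alice@contoso.example": "correct-password",
             "bob@contoso.example": "bobs-password"}
LICENSED_USERS = {"alice@contoso.example"}
APP_REALM = "spn:example-app-id"  # made-up identifier for the hosted application


@dataclass(frozen=True)
class Token:
    user: str
    audience: str      # the application the token was issued for
    expires_at: float  # seconds on an arbitrary clock


def identity_provider_sign_in(user, password, realm, now):
    """Steps 3 to 6: authenticate the user, check authorization, issue a token."""
    if DIRECTORY.get(user) != password:
        raise PermissionError("authentication failed")
    if user not in LICENSED_USERS:
        raise PermissionError("user is not authorized for this application")
    return Token(user=user, audience=realm, expires_at=now + 3600)


def application_request(token: Optional[Token], now: float) -> str:
    """Steps 1, 2, 7 and 8: redirect when no token is presented, else validate it."""
    if token is None:
        return "302 redirect to identity provider"
    if token.audience != APP_REALM or token.expires_at <= now:
        return "401 token rejected"
    return "200 welcome " + token.user


if __name__ == "__main__":
    print(application_request(None, now=0))
    token = identity_provider_sign_in("alice@contoso.example", "correct-password", APP_REALM, now=0)
    print(application_request(token, now=10))
```

Note how the hosted application never sees the password. It only ever sees a token, and it only trusts that token if it was issued for its own realm and has not expired.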

Now, going over the Wireshark trace..

- First, notice the “WSFED” part of the URL. It tells us that we are using WS-Federation (WSFED) endpoints for authentication. This isn’t the most secure protocol, but it has been around forever and is one of the easiest to use. We see that we are using version 1.0 for Dynamics Operations.

- Second, notice the wtrealm, which is really the application ID in Azure against which the user is authorized

- Third, notice the wctx – this contains session data related to the request. You can pass extra information in here if you like.

- Finally, notice the reply address, which is the location the user is redirected to with the proper token.
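If you want to pick a sign-in URL like the one in the trace apart yourself, here is a small Python sketch using only the standard library. The parameter names `wa`, `wtrealm`, `wctx` and `wreply` are the standard WS-Federation passive sign-in parameters. The endpoint, realm and reply values are made up for illustration and are not taken from the trace.

```python
from urllib.parse import parse_qs, urlencode, urlsplit


def build_sign_in_url(idp_endpoint, realm, reply, context):
    """Build a WS-Federation passive sign-in URL from its four usual parts."""
    query = urlencode({
        "wa": "wsignin1.0",  # the requested action: sign-in, version 1.0
        "wtrealm": realm,    # the application the user wants a token for
        "wctx": context,     # opaque session data echoed back to the application
        "wreply": reply,     # where the identity provider sends the token
    })
    return idp_endpoint + "?" + query


def parse_sign_in_url(url):
    """Return the query parameters of a sign-in URL as a plain dict."""
    params = parse_qs(urlsplit(url).query)
    return {name: values[0] for name, values in params.items()}


if __name__ == "__main__":
    # All values below are hypothetical.
    url = build_sign_in_url(
        idp_endpoint="https://login.example.invalid/example-tenant/wsfed",
        realm="spn:example-app-id",
        reply="https://erp.example.invalid/",
        context="rm=0&id=passive&ru=%2f",
    )
    print(url)
    for name, value in parse_sign_in_url(url).items():
        print(name, "=", value)
```

Pasting a captured URL into `parse_sign_in_url` gives you the realm and reply address in readable form, which is usually the first thing to check when a login fails.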

So, WHAT KINDS OF THINGS CAN GO WRONG – EVERYTHING!!!!!!! But these are the most common:

- A user is not officially authorized for the Lifecycle Services application with that specific SPN

- The reply address, which is the IIS application URL, has not been authorized in Lifecycle Services (note: changing this and setting up a hybrid Azure authentication would give you the ability to create an on-premise host)

- A user can’t federate in the first place and can’t even get an authentication, because the company’s Active Directory is not set up for authentication in Azure

- A third-party application just won’t work, period. Some applications don’t even support WSFED endpoints, and some government security regulations have requirements that aren’t possible via WSFED (translation: check and verify before buying)

- Somebody has to go through Skype Online, GitHub, etc. just to be able to do integrations, because of incorrect or improper federation requests

- You can’t do any on-premise development and have to host bunches of expensive cloud machines for development, resulting in very slow code moves that have to go to Lifecycle Services and back (blog post later on this one). An incorrect ADFS setup introduces delays in development and the build process.
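A couple of the failures above (an unregistered realm, an unauthorized reply address) can be spotted directly from the sign-in URL. Below is a minimal Python sketch of such a check. The lists of registered realms and reply addresses are hypothetical inputs that you would have to collect from your own configuration; nothing here queries Azure or Lifecycle Services.

```python
from urllib.parse import parse_qs, urlencode, urlsplit


def diagnose_sign_in(url, registered_realms, registered_replies):
    """Return a list of likely problems with a WS-Federation sign-in URL."""
    params = {name: values[0] for name, values in parse_qs(urlsplit(url).query).items()}
    problems = []
    if params.get("wa") != "wsignin1.0":
        problems.append("not a WS-Federation sign-in request")
    if params.get("wtrealm") not in registered_realms:
        problems.append("realm is not registered with the identity provider")
    reply = params.get("wreply")  # optional in WS-Federation, so only check it if present
    if reply is not None and reply not in registered_replies:
        problems.append("reply address is not registered")
    return problems


if __name__ == "__main__":
    # Hypothetical values for illustration only.
    realms = {"spn:example-app-id"}
    replies = {"https://erp.example.invalid/"}
    query = urlencode({
        "wa": "wsignin1.0",
        "wtrealm": "spn:example-app-id",
        "wreply": "https://onprem.example.invalid/",
    })
    url = "https://login.example.invalid/example-tenant/wsfed?" + query
    for problem in diagnose_sign_in(url, realms, replies):
        print("problem:", problem)
```

An empty result does not mean the login will succeed (the user may still be unlicensed or unable to federate), but a non-empty one tells you where to look first.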

What have we learned:

Other than the fact that the Dynamics Operations architecture is a lot more on-premise than Microsoft has announced, we have seen how extensible the architecture is. We’ve gone underneath the hood and really examined the underlying mechanism powering the extensibility. It offers advantages by decoupling from host operating systems, which opens up far more potential applications thanks to a more rapid development cycle. However, it also brings all the challenges that browser-based application development entails. Set up incorrectly, we could be in a nightmare situation of having to go through services like OneDrive just to do an integration. HTTP-based authentication models at this scale are the way of the future, but what will be more interesting is to see just how many on-premise practices will be included. I’ll bring up several of them in later posts.

Take Care..
